Aligning Values in AI Applications

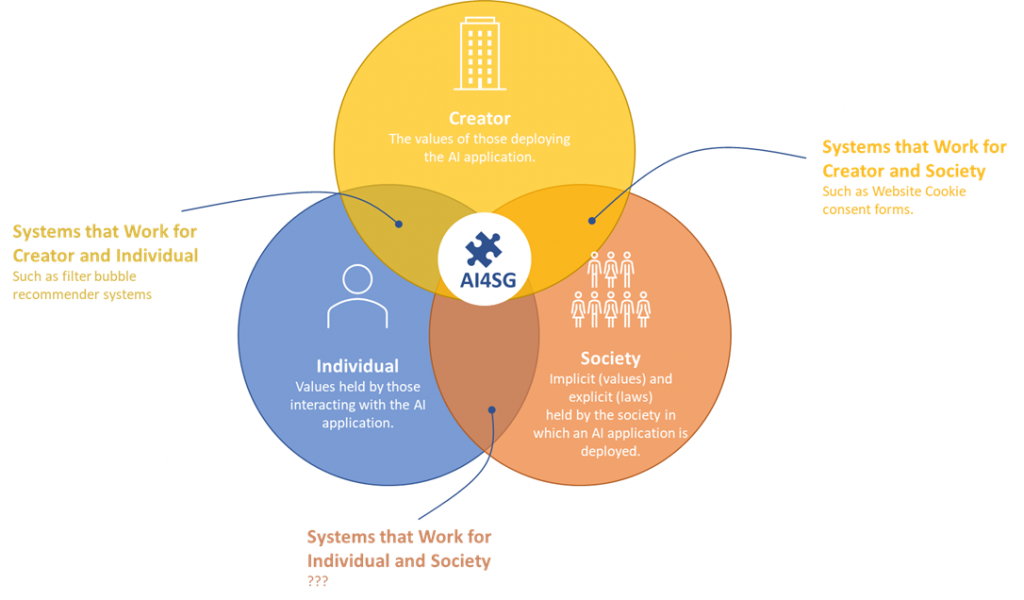

Artificial Intelligence (AI) is everywhere. When you use the internet, or computers in general, you interact (sometimes unknowingly) with dozens of systems that use some form of AI. Ad networks serve you personalized ads when you browse the web, your phone corrects your spelling, and your computer’s operating system decides which programs get priority access to computational resources. Some of these systems are nice, some are less nice. I believe the most successful AI applications are aligned with the values of three parties:

- The person interacting with the system,

- The creator of the system,

- The society in which the creator and the person interacting with the system live.

All AI applications are to a greater or lesser extent aligned along these three different sets of values. I’ll briefly elaborate on what the sets of values are, give examples of AI applications that have mismatches and conclude with examples where all values are in line.

The Three Sets of Values

Values of those interacting with the system

Different types of people interact with AI systems, and AI systems affect them differently. People differ in their level of understanding and in the values they hold. You can be sure that someone interacting with your AI application has some set of values. These range from very basic, such as being able to provide for themselves and/or their family, to more complex, such as having a broad frame of reference. Either way, what your system provides will affect the extent to which they can pursue their values.

In addition, people may interact with a system more or less voluntarily. They do not necessarily choose to interact with a system, and may not even interact with it consciously. A bank that develops an AI application to automate the assessment of loan applications forces both customers and employees to interact with it. People may not realize that the content a recommender system presents to them is influenced by their past viewing behavior.

An AI system might affect the extent to which people can pursue their values. The loan application assessment system decides whether or not people get their loans. The recommender system influences what they are exposed to.

Values of the Creator of the System

Any party that deploys an AI application for whatever reason, be it to serve their customer base or to improve internal processes, does so based on a set of values. These might be very trivial, such as increasing revenue or reducing costs. They might be more altruistic, such as not wasting resources. Or they might even be very normative, such as promoting a healthy lifestyle or improving quality of living. The goal of the AI application is to help the party that deploys it reach the goals that follow from its values. For recommender systems specifically, research has started on systems that pursue multiple objectives at the same time. A system can increase the number of videos someone watches or purchases they make and at the same time provide a diverse, broad frame of reference.

Values of the Society in Which an Application is Deployed

Both the party deploying a system and the individuals that interact with the system are part of a society. This can be on a national level, but more and more often it is a global society. A society also has values. Laws and legislation capture or formalize part of these values, while other values are left more implicit. For example, Dutch rules prescribe what advertisers can and cannot claim, to protect the Dutch from deception. The Reclame Code Commissie, a Dutch advertising watchdog, monitors this. On the other hand, ethical codes of conduct stipulate how journalists or editorial boards should present their information when reporting. Although deviating from such a code goes against their professional ethics, no laws prevent them from doing so.

Mismatches

There are plenty of examples where AI is unsuccessful because not all values are aligned. The illustration above provides examples of AI applications for which there is only a partial match between values.

Systems that work for the creator and the individual.

Recommender systems are examples of AI applications that are in line with the values of the individual and the deploying party. Personally I’m quite happy with YouTube and the nice cycling filter bubble it created for me. I can endlessly watch highly relevant and enjoyable cycling videos, fully in line with my personal preferences. I watch videos, YouTube can show me ads. A win-win situation! However, from a societal point of view, it is not ideal that I spend all my time watching cycling videos when I could also be watching the news or videos on other topics and developing myself more. Would society and I not both benefit from me spending my time watching something else?

Systems that work for the creator and society.

There are also applications that are in line with the values of the deploying party and society. While not strictly an AI application, for a couple of years now all websites have been asking (or should be asking) us for consent to track our behavior through cookies. This is fully in line with the values of the deploying party: they do not risk paying fines and they are allowed to use our data. It is also in line with the values we hold as a society: every member of society has ownership of their data. But is it in line with our values as citizens? Do we want to have this ownership? Do we want to be forced to provide or withhold consent? I personally value effortlessly browsing the internet, and I prefer not having to click over having this additional control over my privacy. The way the laws are implemented does not meet this value at all.

Systems that work for society and the individual.

I haven’t really been able to come up with examples of these, which kind of makes sense: what party or organisation would deploy a system that is in line with the values of society and the individual but not with its own? There could be examples out there if we search for a bit, but I guess they do not stay around for long, since at some point the deploying party will pull the plug on them.

When all three sets of values are aligned

During my PhD we tried to achieve alignment as well. In our work on choice overload in recommender systems we designed and tested a diversification algorithm to reduce choice difficulty. When we present users with a set of recommendations, we force them to make a decision, and this decision can be easier or more difficult. Our aim was to create lists of recommendations that were easy to choose from, while maintaining or increasing the level of satisfaction. Through a number of studies we showed the effects of increasing the diversity of recommendation sets while keeping predicted relevance equal. The idea is that users should get satisfactory results, but choosing from those results should not involve unnecessary choice difficulty. We did, however, leave out societal values.
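To make the idea of increasing diversity while keeping predicted relevance roughly equal more concrete, here is a minimal sketch. It is not the algorithm from the PhD work: the greedy farthest-point selection, the `tolerance` parameter, the feature vectors and the example data are all assumptions made for illustration.

```python
from math import dist


def diversify(candidates, k, tolerance=0.05):
    """Greedily pick up to k items that are spread out in feature space.

    candidates: list of (item_id, predicted_relevance, feature_vector).
    Only items whose predicted relevance is within `tolerance` of the best
    candidate are eligible, so relevance stays roughly equal (assumption).
    """
    best = max(rel for _, rel, _ in candidates)
    pool = [c for c in candidates if best - c[1] <= tolerance]
    # Start from the most relevant eligible item.
    chosen = [max(pool, key=lambda c: c[1])]
    pool.remove(chosen[0])
    while pool and len(chosen) < k:
        # Add the item that is farthest from its nearest already-chosen item.
        nxt = max(pool, key=lambda c: min(dist(c[2], s[2]) for s in chosen))
        chosen.append(nxt)
        pool.remove(nxt)
    return [item_id for item_id, _, _ in chosen]


# Hypothetical items: (id, predicted relevance, two latent features).
example = [
    ("a", 0.90, (0.0, 0.0)),
    ("b", 0.89, (0.1, 0.0)),
    ("c", 0.88, (1.0, 1.0)),
    ("d", 0.87, (0.0, 1.0)),
    ("e", 0.50, (5.0, 5.0)),
]
print(diversify(example, 3))  # ['a', 'c', 'd']
```

Item "b" is nearly identical to "a" and is skipped in favour of more distinct items, while "e" is very different but never considered because its predicted relevance is too low.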

Some systems have all three sets of values aligned. One name for these systems is AI for Social Good (AI4SG), and this movement is gaining more and more momentum through academic workshops, journals, platforms, LinkedIn groups and blogs. Maybe not surprisingly, it is mostly NGOs that are active in developing AI4SG, for example by using machine learning to combat poaching.

So what should we aim for and how should we get there? I am not entirely sure, but I am 100% convinced that whatever we do in AI, we will have to start assessing our initiatives along these three sets of values. You have an idea that will increase your firm’s revenue by 20%? Cool! Now go and check how that initiative aligns with the values of the people that will be interacting with your system and how society will look upon your idea. Are you sure that whatever your idea brings about is in line with your own values, the values of the people interacting with it and the values held by the society in which you will deploy your system? Then go for it! Otherwise, refine and revise. I personally will ensure that my Data Science team at Obvion makes these assessments for all AI initiatives. I do not think it’s realistic to never have any mismatch, but the least we can do is minimize the probability of any unconscious, unintended mismatches.
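One lightweight way to make such an assessment explicit is a small checklist structure. The sketch below is only an illustration: the class name, the method names and the example values are hypothetical, and the judgements themselves still have to come from people, not from code.

```python
from dataclasses import dataclass, field

PARTIES = ("individual", "creator", "society")


@dataclass
class ValueAssessment:
    """Records, per party, which values an initiative supports or harms."""
    initiative: str
    # Maps each party to a list of (value, is_supported) judgements.
    judgements: dict = field(default_factory=lambda: {p: [] for p in PARTIES})

    def judge(self, party, value, supported):
        self.judgements[party].append((value, supported))

    def unassessed(self):
        # Parties for which nobody has recorded a judgement yet.
        return [p for p in PARTIES if not self.judgements[p]]

    def mismatches(self):
        return [(p, v) for p in PARTIES for v, ok in self.judgements[p] if not ok]

    def ready(self):
        # Go ahead only if every party was considered and nothing conflicts.
        return not self.unassessed() and not self.mismatches()


# Hypothetical initiative and judgements.
assessment = ValueAssessment("upsell model")
assessment.judge("creator", "increase revenue", True)
assessment.judge("individual", "provide for family", False)
print(assessment.unassessed())  # ['society']
print(assessment.mismatches())  # [('individual', 'provide for family')]
print(assessment.ready())       # False
```

The point of the structure is that an initiative with an unassessed party counts as not ready, which guards against exactly the unconscious mismatches mentioned above.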

Aligning Values in Practice

In my current role we are taking our first steps in AI. In an earlier post I described how I will try to do this in a value-sensitive way, by developing and deploying AI while advancing along two axes. The first axis along which we will grow is that of decision support versus automation. We will start with models that provide information for making decisions, rather than models that make decisions automatically. A model predicting the number of loan applications can initially be used by managers to ensure enough employees are available to process them. This same model can later automatically scale capacity up or down. This, however, only takes place after we have experienced how the predictions can be used. Secondly, we will start with AI that affects our internal processes and stakeholders and then move outwards, to AI that affects advisors and customers. This is also to ensure that we can actually check to what extent our efforts are in line with the values of those affected by our AI.
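The first axis can be sketched in code as the same forecast being used in two modes. This is a hedged illustration only: the function, the `Mode` enum, and the numbers (applications per employee, staff counts) are hypothetical and do not describe an actual system.

```python
from enum import Enum
from math import ceil


class Mode(Enum):
    DECISION_SUPPORT = "decision_support"
    AUTOMATED = "automated"


def plan_capacity(predicted_applications, per_employee, current_staff, mode):
    """Turn a loan-application forecast into a staffing outcome.

    In decision-support mode the forecast only informs a manager;
    in automated mode the staffing level is set directly.
    """
    needed = ceil(predicted_applications / per_employee)
    if mode is Mode.AUTOMATED:
        return {"staff": needed, "note": f"staff set automatically to {needed}"}
    return {
        "staff": current_staff,
        "note": f"forecast suggests {needed} employees; manager decides",
    }


# Hypothetical week: 120 predicted applications, 25 per employee, 4 on staff.
print(plan_capacity(120, 25, 4, Mode.DECISION_SUPPORT))
print(plan_capacity(120, 25, 4, Mode.AUTOMATED))
```

Because the prediction logic is identical in both modes, moving from decision support to automation is a change in who acts on the forecast, which can be made once the predictions have proven useful in practice.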
